I am a Ph.D. candidate at the

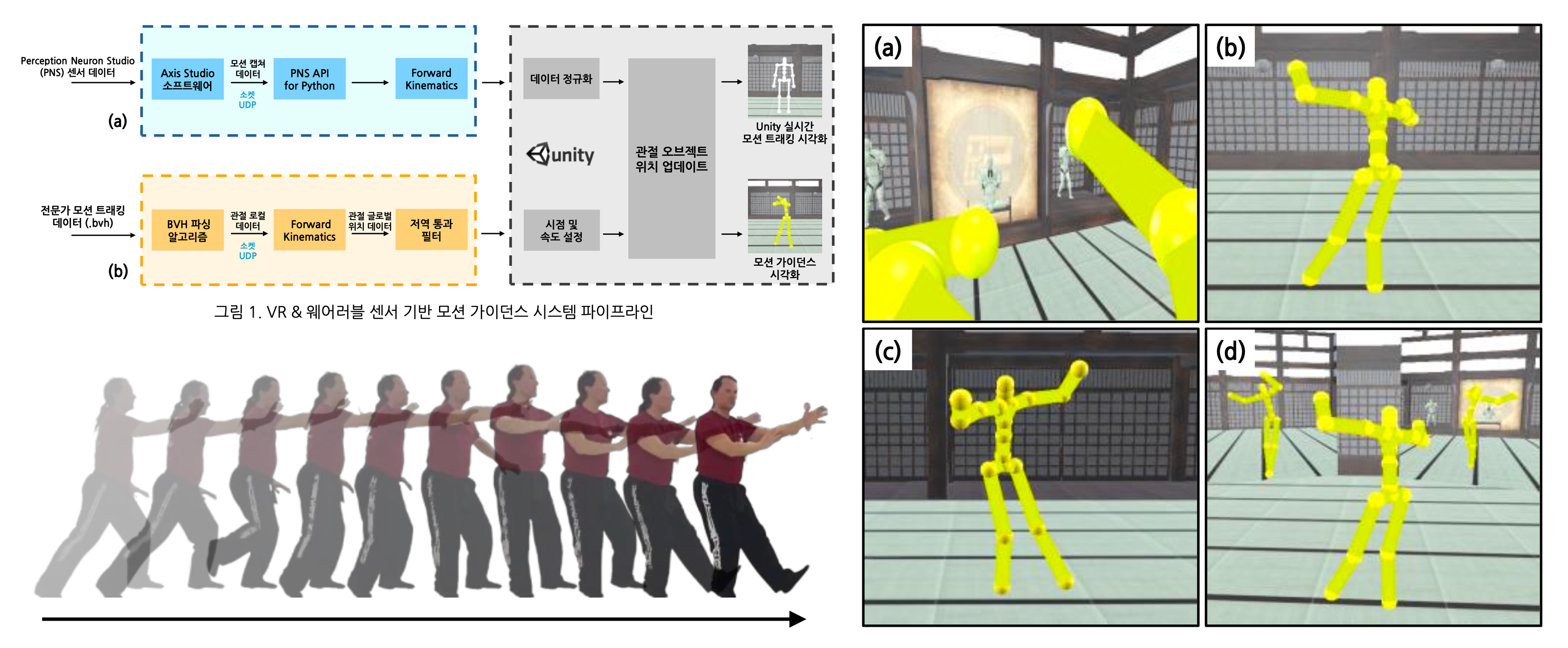

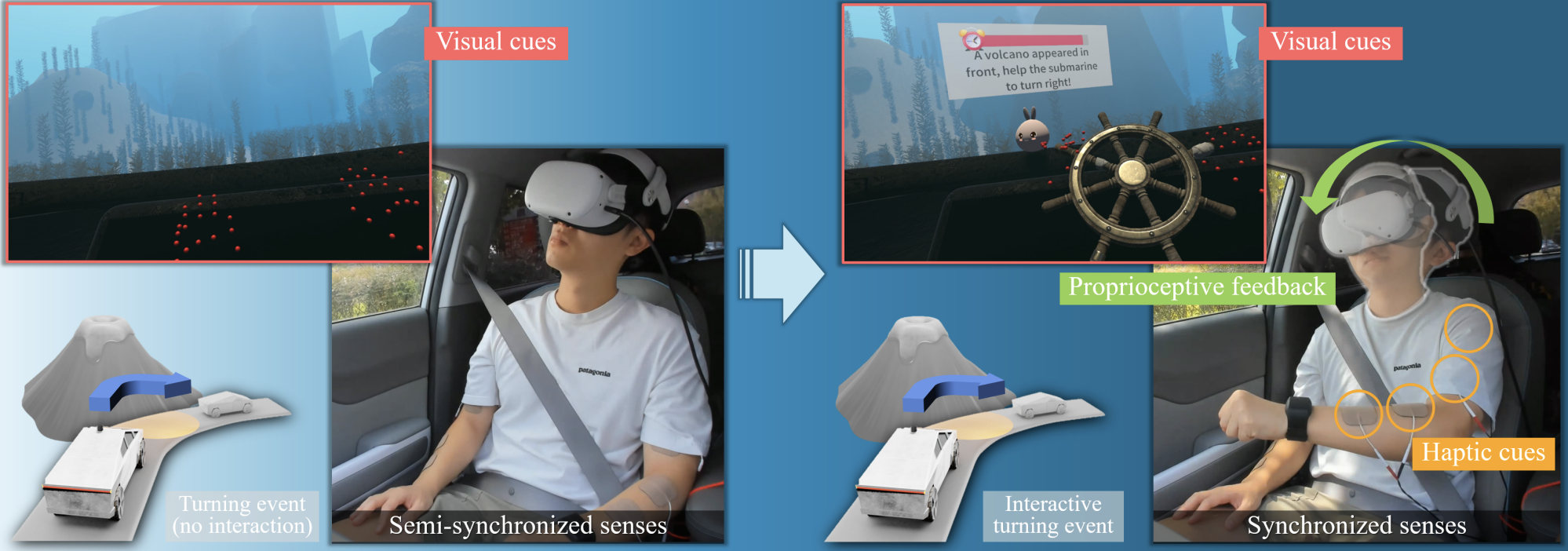

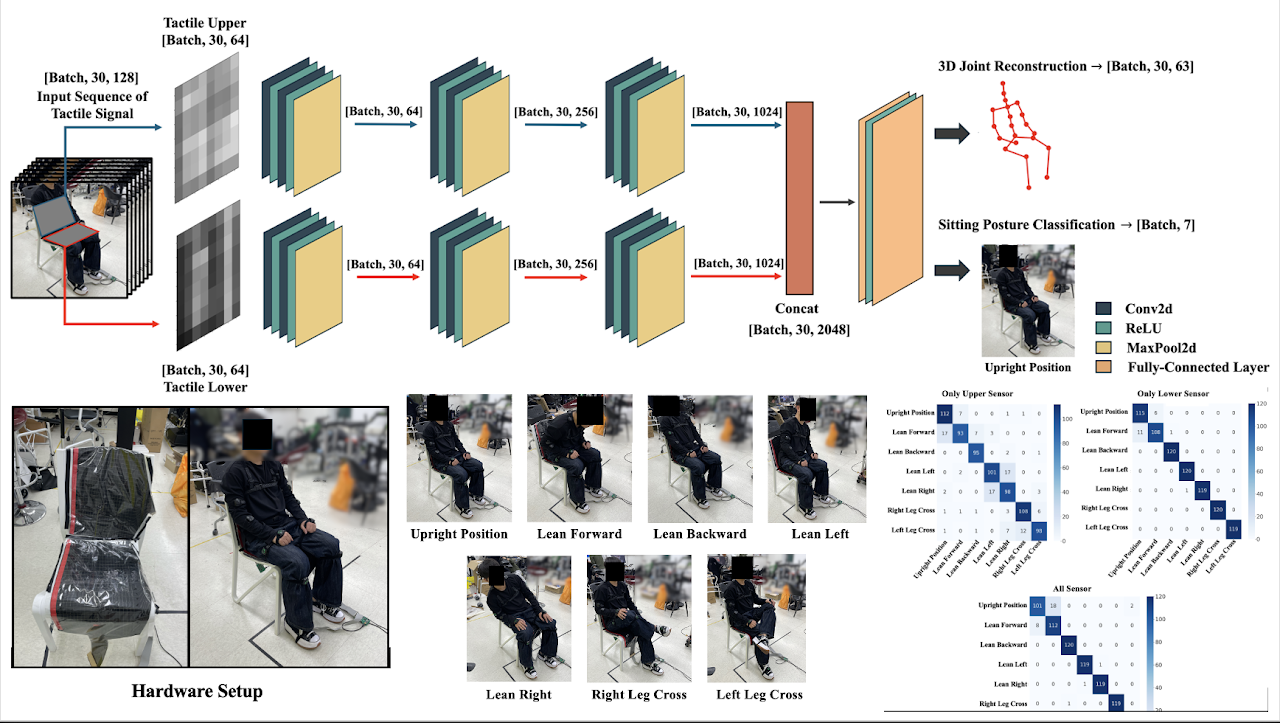

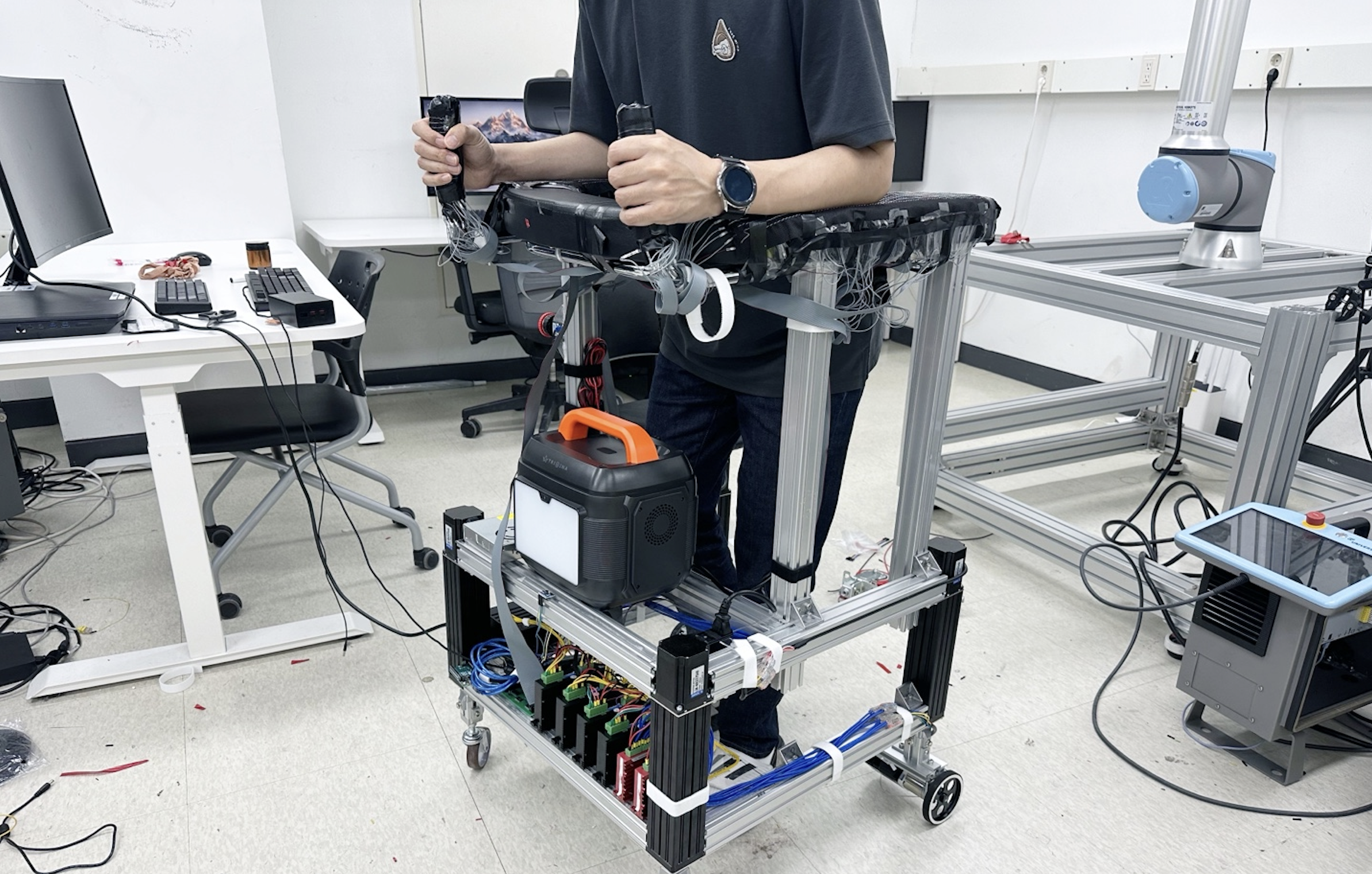

I design interactive AI systems that respond to the human body and behavior in real time. My work combines multimodal sensing—including muscle activity, movement, gaze, and physiological states—with adaptive feedback to support learning, engagement, and decision-making. I apply this approach across domains such as sports training, accessibility for children with Autism Spectrum Disorder, and human-vehicle interaction in autonomous driving. In these contexts, where expert instruction or hands-on experience may be limited, I aim to build systems that lower barriers to learning and promote embodied, inclusive, and personalized interaction. While it is just a dream for now, I hope to one day launch the Sensorimotor Multimodal Wearable (SMW) Lab to continue studying how sensing and feedback technologies can serve diverse learners through embodied, adaptive interaction.

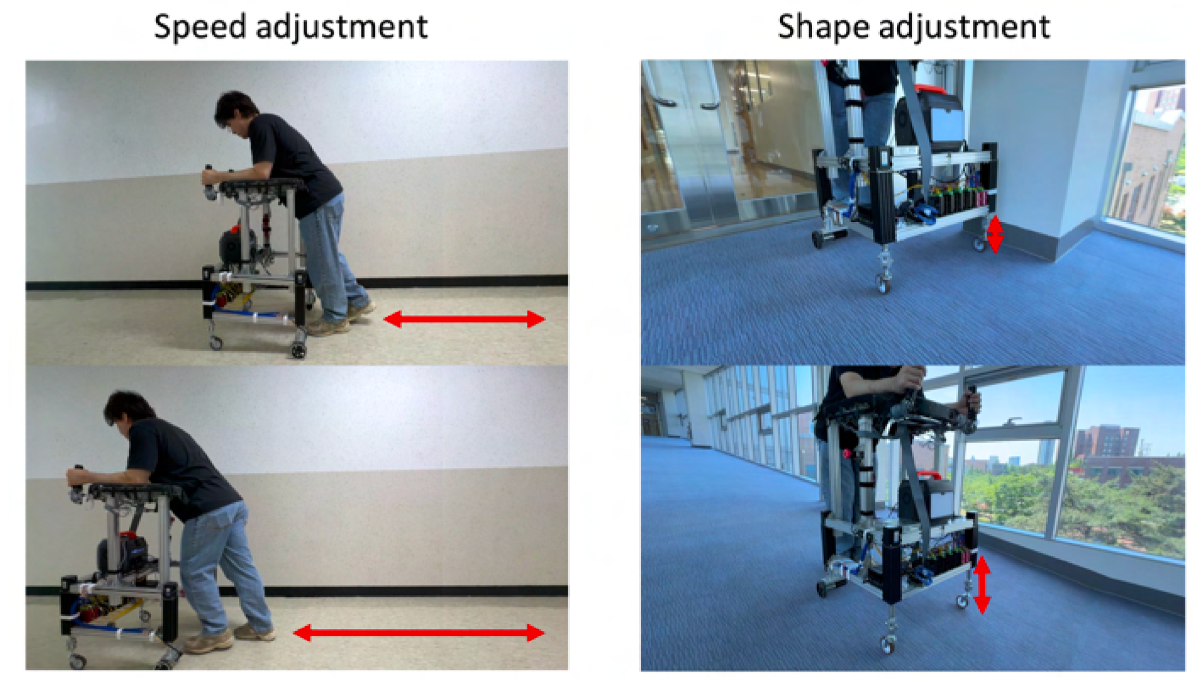

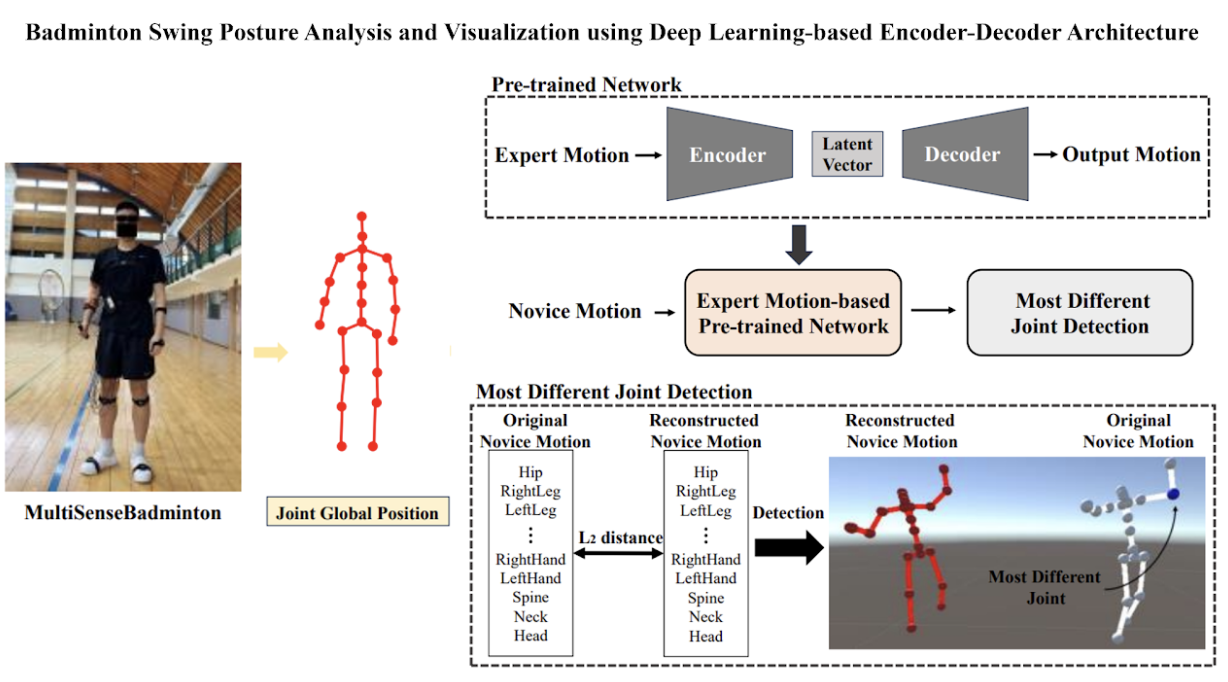

SportsHCI: AI-guided motion and muscle feedback, Racket sports coaching systems, Sensor-based motor learning

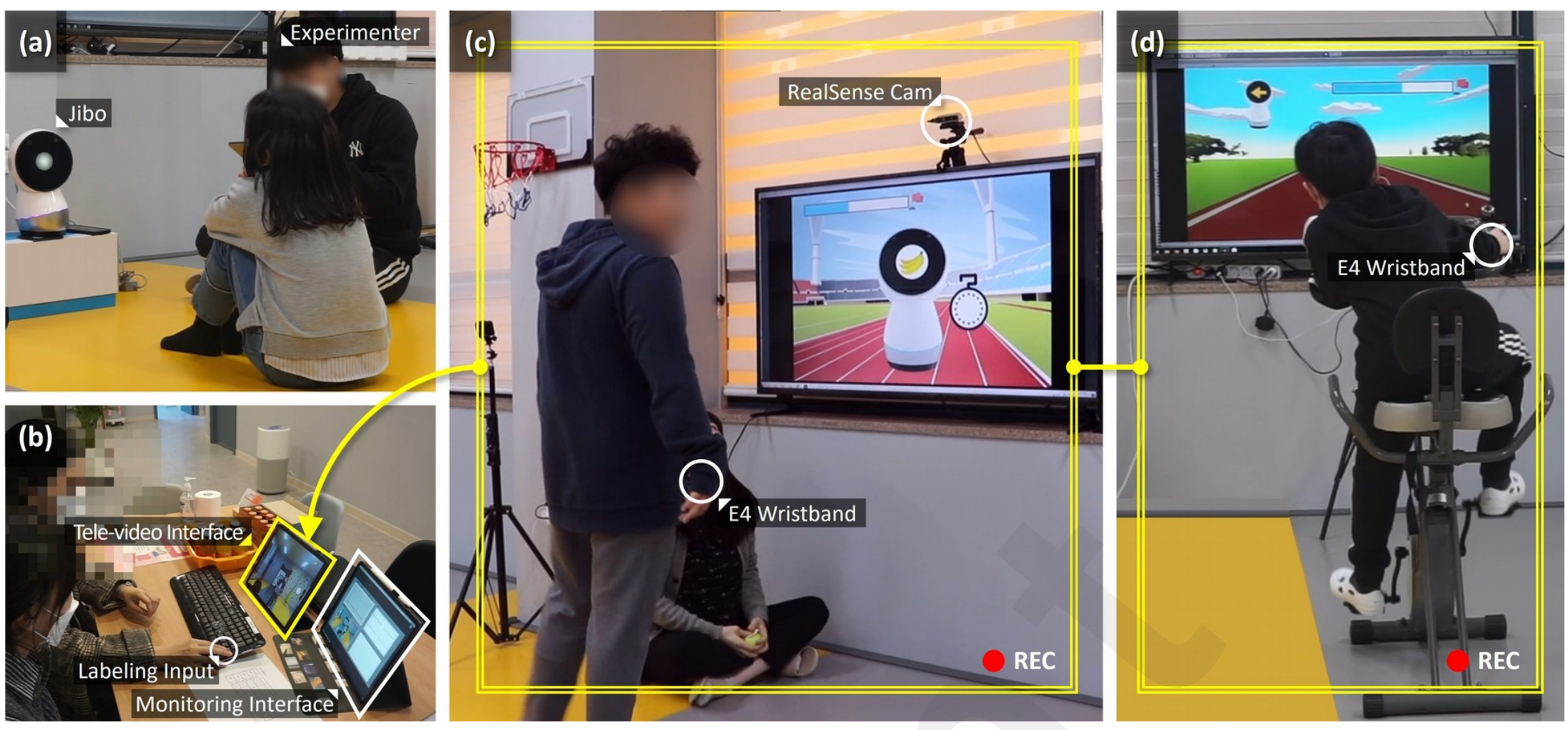

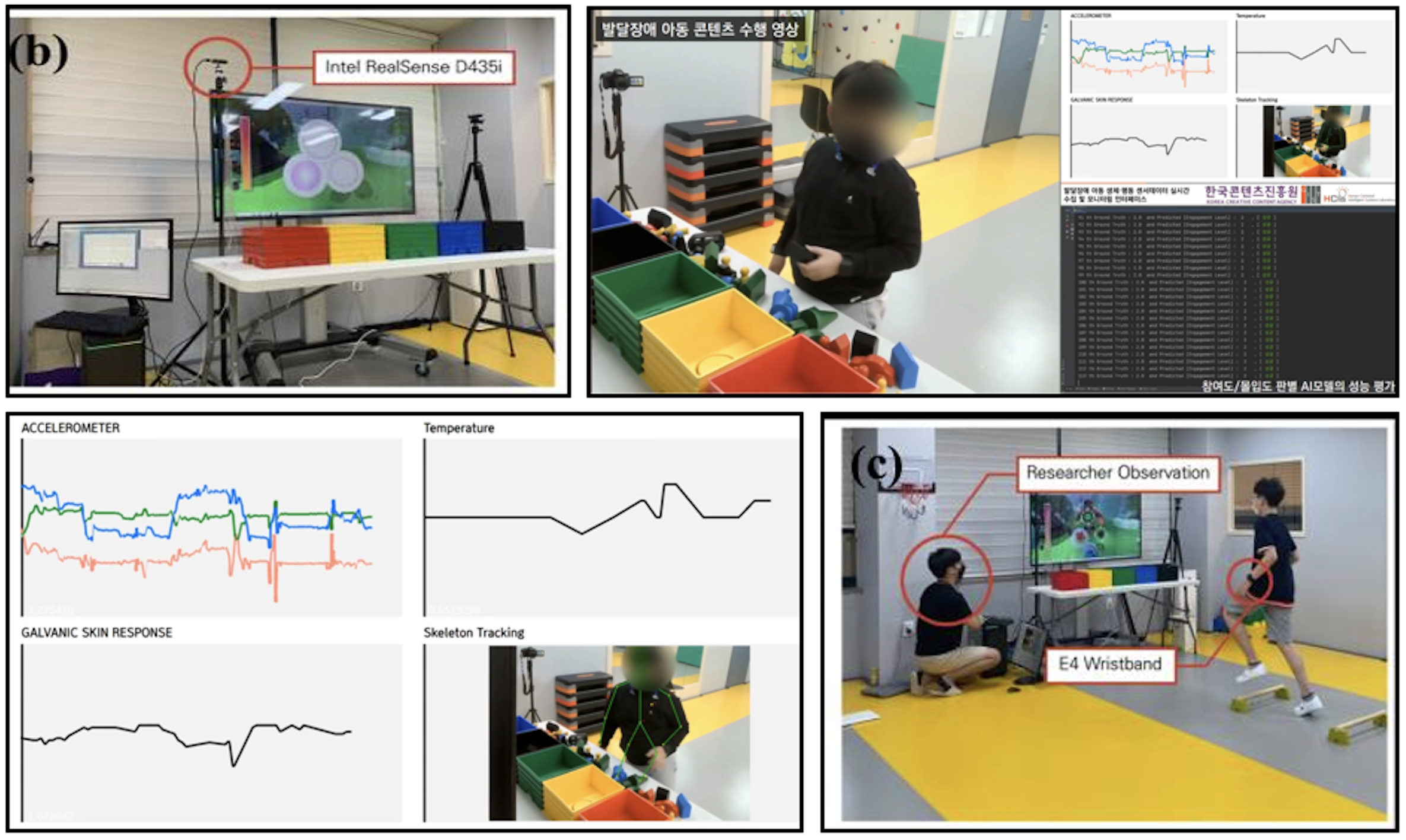

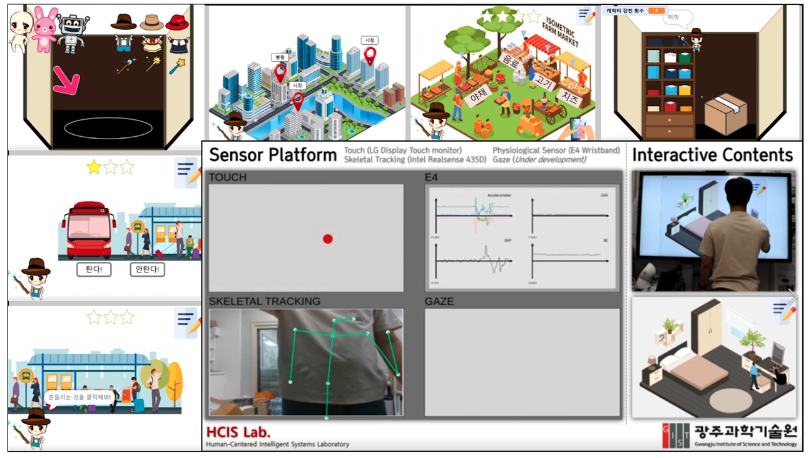

A11y: Engagement modeling for Autism Spectrum Disorder, Deep learning for behavioral intervention

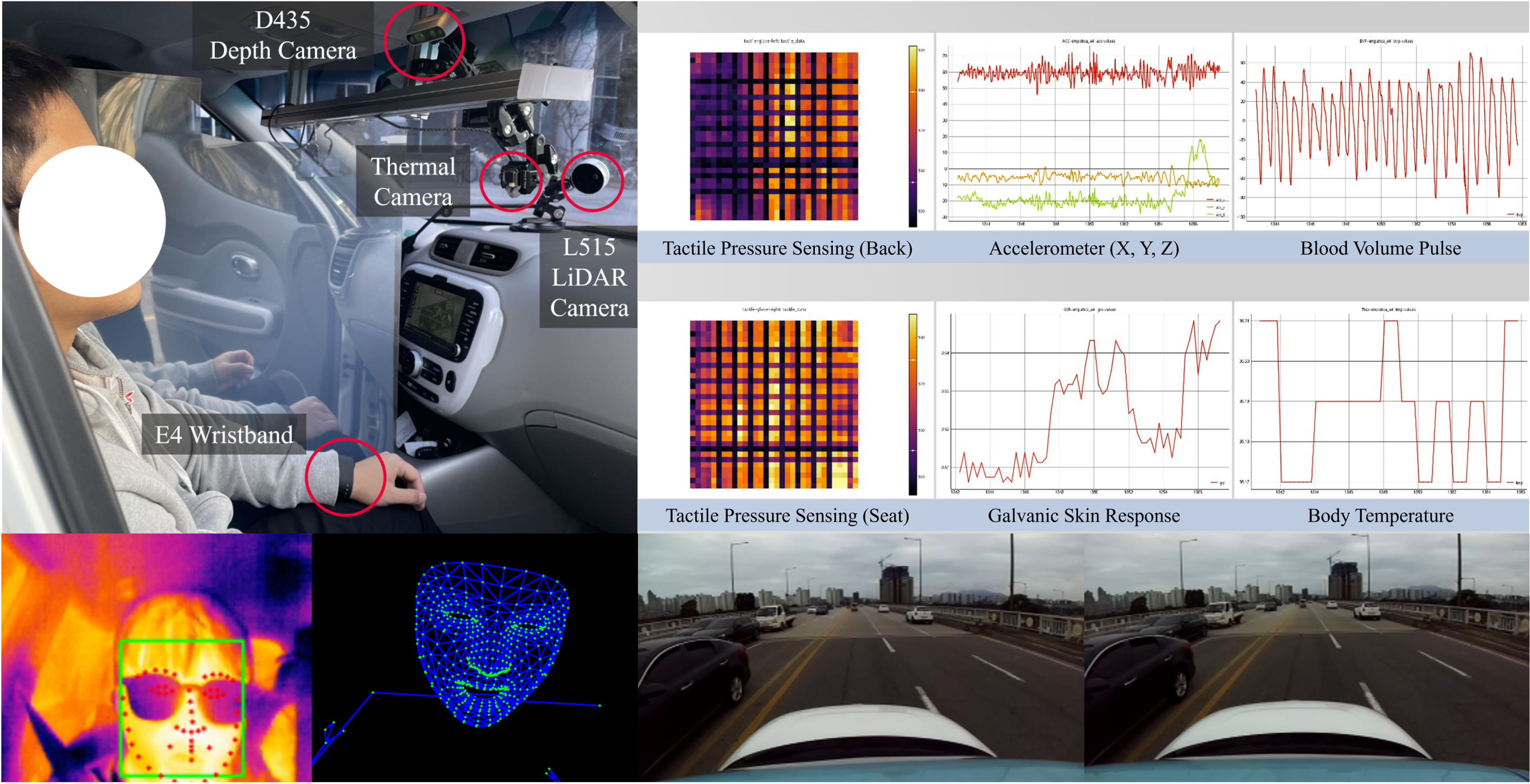

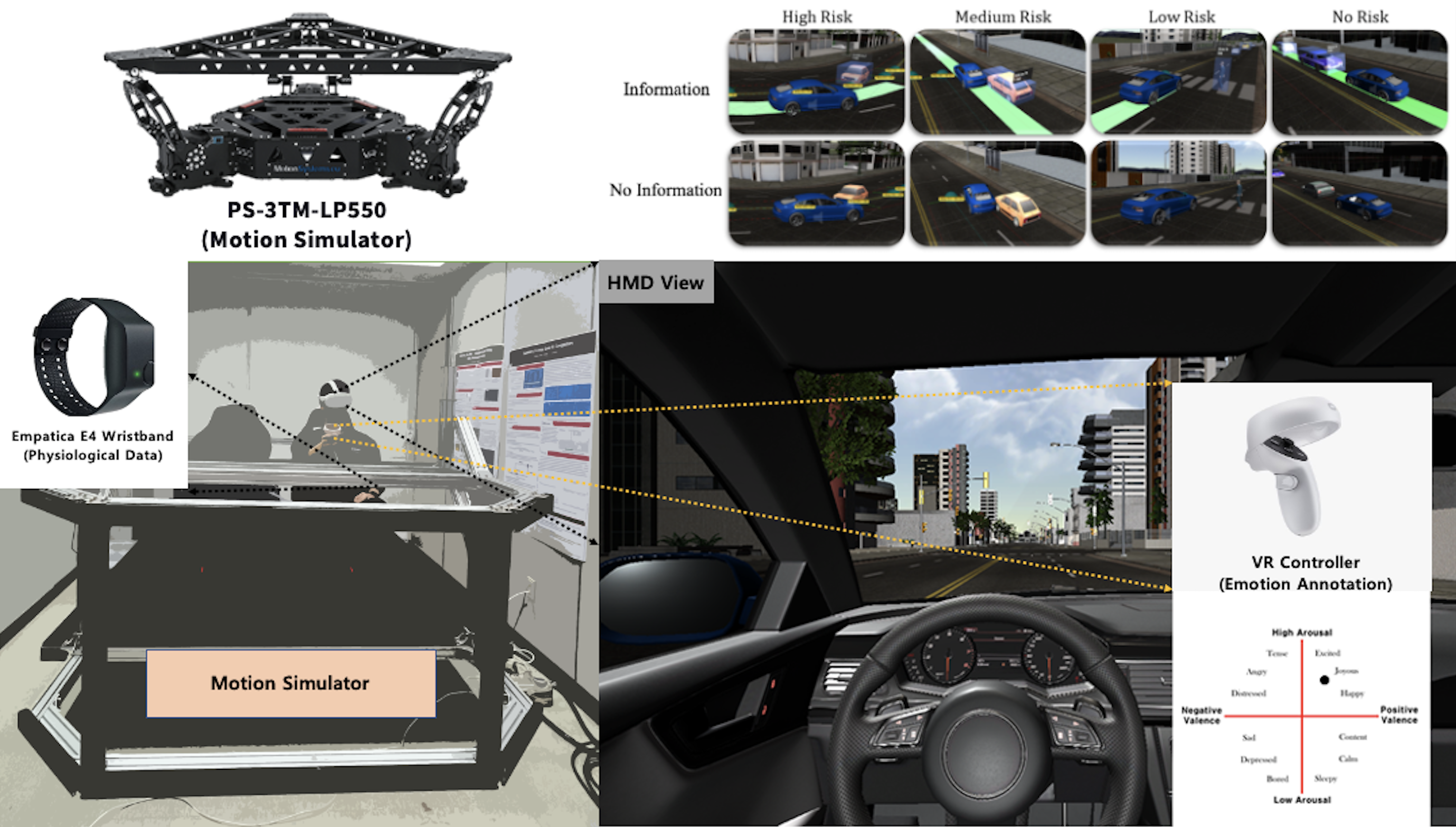

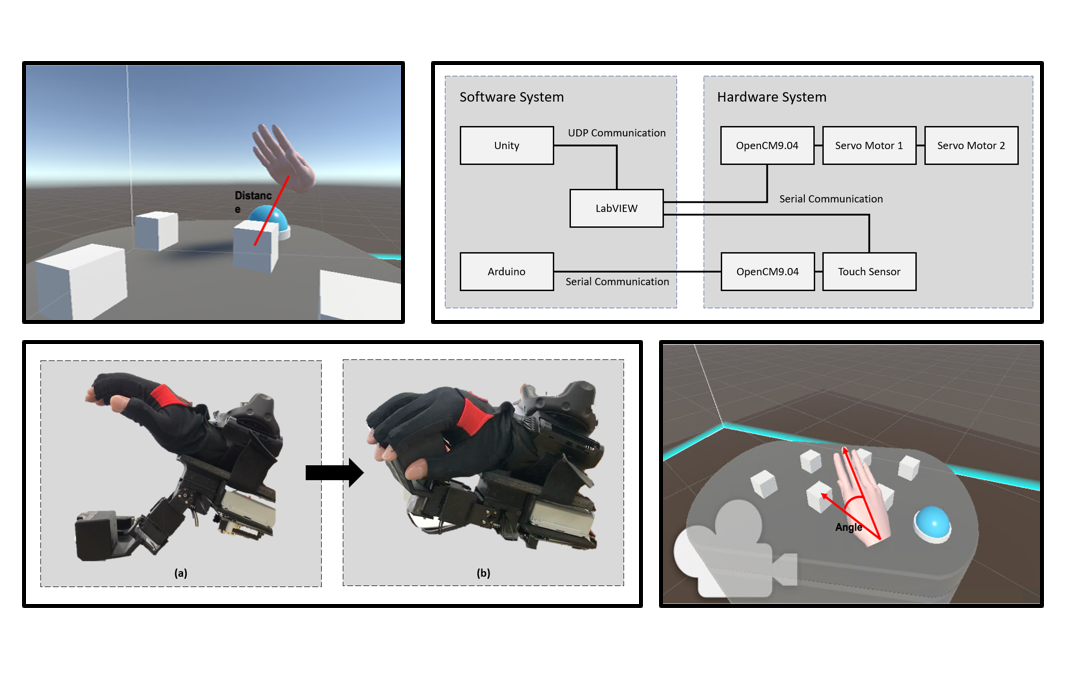

Autonomous Vehicle: Driver behavior sensing and modeling, Risk-aware autonomous driving simulation, XR-based in-vehicle interaction design

You can download my CV here:
